Remotion Popcorn Sound Visualizer

Creating a sound visualizer can add an engaging layer to your audio content, and Remotion provides a great toolkit for bringing this concept to life. In this tutorial, we'll walk through building a "popcorn" sound visualizer, named for its animation style where circles pop up and collide in sync with the audio. This project is a fun way to enhance music videos, podcasts, or any audio-driven content with dynamic visuals.

We'll go step-by-step through the process, covering audio sampling, visual effects, and how to implement collision logic for the popping circles. By the end of this article, you'll have a working sound visualizer that reacts to audio, creating a lively visual effect that matches the sound.

Please note that all videos in this article contain sound.

Setup

This project requires quite a bit of code, so we'll focus on the most important parts. The examples call several helper functions whose full implementations can be found in the repository; leaving them out keeps the code snippets concise and simple.

First scene without animation

Similar to the animations introduction article, we'll begin by creating a static scene.

Keep in mind that the <Audio /> component, which is required for sound playback, is placed in the root composition. The component below is rendered inside a full-sized <svg /> element with a gradient background, so its main task is to render <circle /> SVG elements at specific positions with a given radius.

```tsx
import { useVideoConfig } from "remotion"
// useColorPalette and createArrayOfSize are helpers from the project
// repository; createArrayOfSize(n) yields the indices 0 to n - 1.

export default () => {
  // Retrieve the circle fill color from a globally configured
  // color palette in this example.
  const { primary } = useColorPalette()
  const { width, height } = useVideoConfig()

  const circleCount = 19

  // Create circles evenly distributed horizontally,
  // alternating slightly above and below the vertical center
  const circlePositions = createArrayOfSize(circleCount).map((index) => {
    const verticalOffset = (index % 2 === 0 ? -1 : 1) * 10
    const space = width / (circleCount + 1)

    return {
      x: (index + 1) * space,
      y: height / 2 + verticalOffset,
    }
  })

  return (
    <>
      {circlePositions.map((position, index) => {
        return (
          <circle
            fill={primary}
            cx={position.x}
            cy={position.y}
            key={index}
            r={10} // A fixed radius for now
          />
        )
      })}
    </>
  )
}
```
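
The layout arithmetic can be checked outside of Remotion. The sketch below is a standalone version of the position calculation; `createArrayOfSize` is re-implemented here as a hypothetical stand-in for the repository helper, assuming it returns the indices `0` to `n - 1`.

```typescript
// Hypothetical stand-in for the repository helper: [0, 1, ..., size - 1]
function createArrayOfSize(size: number): number[] {
  return Array.from({ length: size }, (_, index) => index)
}

interface CirclePosition {
  x: number
  y: number
}

// Spread `count` circles evenly across the width, leaving one gap of
// `space` on each side, and alternate them 10px above/below the center.
function layoutCircles(count: number, width: number, height: number): CirclePosition[] {
  const space = width / (count + 1)
  return createArrayOfSize(count).map((index) => ({
    x: (index + 1) * space,
    y: height / 2 + (index % 2 === 0 ? -1 : 1) * 10,
  }))
}

const positions = layoutCircles(19, 1200, 400)
console.log(positions[0], positions[18]) // { x: 60, y: 190 } { x: 1140, y: 190 }
```

With a 1200px wide video and 19 circles, the spacing is 1200 / 20 = 60px, so the circles run from x = 60 to x = 1140.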

Here's the current layout of the circles. Although the sound is playing, the circles remain static for now. We'll add sound-driven movement in the next step:

Adding the sound effect

As shown in the audio visualization example in the Remotion docs, it's fairly easy to get some animation going. We'll use the @remotion/media-utils package, which provides helpful functions for loading and analyzing audio data, and apply them here.

This example also uses custom immutable Vector2 and Circle classes, which make the calculations easier and better structured than plain JavaScript objects would.
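
The real `Vector2` and `Circle` classes live in the repository and aren't shown in this article. The sketch below is a hypothetical minimal version that only provides the methods used in the snippets that follow (`add`, `subtract`, `multiply`, `length`, `normalize`, `mix`, `setCenter` and `clampInRectangle`). Every method returns a new instance instead of mutating, which is what makes the classes immutable. The zero-vector handling in `normalize` and the exact clamping rule are assumptions.

```typescript
// Hypothetical minimal immutable 2D vector: every method returns a new instance.
class Vector2 {
  readonly x: number
  readonly y: number

  constructor(x: number, y: number) {
    this.x = x
    this.y = y
  }

  add(other: Vector2): Vector2 {
    return new Vector2(this.x + other.x, this.y + other.y)
  }

  subtract(other: Vector2): Vector2 {
    return new Vector2(this.x - other.x, this.y - other.y)
  }

  multiply(factor: number): Vector2 {
    return new Vector2(this.x * factor, this.y * factor)
  }

  length(): number {
    return Math.hypot(this.x, this.y)
  }

  // Unit vector in the same direction. Returning a zero vector for a
  // zero-length input is an assumption made here to avoid dividing by zero.
  normalize(): Vector2 {
    const length = this.length()
    return length === 0 ? new Vector2(0, 0) : this.multiply(1 / length)
  }

  // Linear interpolation: t = 0 keeps this vector, t = 1 returns `target`.
  mix(target: Vector2, t: number): Vector2 {
    return this.add(target.subtract(this).multiply(t))
  }
}

// Hypothetical minimal immutable circle.
class Circle {
  readonly center: Vector2
  readonly radius: number

  constructor(center: Vector2, radius: number) {
    this.center = center
    this.radius = radius
  }

  // Returns a copy with a new center; the original circle is unchanged.
  setCenter(center: Vector2): Circle {
    return new Circle(center, this.radius)
  }

  // Moves the center so the circle lies inside [0, size.x] x [0, size.y]
  // (assuming the circle is small enough to fit).
  clampInRectangle(size: Vector2): Circle {
    const clamp = (value: number, min: number, max: number) =>
      Math.min(Math.max(value, min), max)
    return this.setCenter(
      new Vector2(
        clamp(this.center.x, this.radius, size.x - this.radius),
        clamp(this.center.y, this.radius, size.y - this.radius),
      ),
    )
  }
}

// Usage: "moving" a circle produces a new Circle and leaves the old one intact.
const original = new Circle(new Vector2(3, 4), 10)
const moved = original.setCenter(original.center.add(new Vector2(1, 0)))
console.log(original.center.x, moved.center.x) // 3 4
```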

Note also that while visualizeAudio returns 32 samples, there are only 19 circles. The numbers don't have to match, as long as there are at least as many samples as circles: we don't need to visualize every extracted sample and can simply use a subset of them.

```tsx
import { useCurrentFrame, useVideoConfig } from "remotion"
import { useAudioData, visualizeAudio } from "@remotion/media-utils"
// Vector2, Circle, useColorPalette, createArrayOfSize and sampleMusicUrl
// come from the project repository.

export default () => {
  // staticFile("/NeonNightsShort.m4a")
  const musicUrl = sampleMusicUrl

  const { primary } = useColorPalette()
  const frame = useCurrentFrame()
  const { fps, width, height } = useVideoConfig()

  // useAudioData returns null until the audio file has been loaded
  const audioData = useAudioData(musicUrl)
  if (!audioData) {
    return null
  }

  // Amplitudes for 32 frequency ranges, ordered from low to high
  const visualization = visualizeAudio({
    fps,
    frame,
    audioData,
    numberOfSamples: 32,
    smoothing: true,
  })

  const circleCount = 19

  // Create circles evenly distributed horizontally
  const circles = createArrayOfSize(circleCount).map((index) => {
    const verticalOffset = (index % 2 === 0 ? -1 : 1) * 10
    const space = width / (circleCount + 1)

    // Keep the center position for now
    const center = new Vector2(
      (index + 1) * space,
      height / 2 + verticalOffset,
    )

    // Adjust the radius based on the visualization.
    // Multiply it by a value to make the circle larger.
    const radius = visualization[index] * 200

    // Use a custom Circle class
    return new Circle(center, radius)
  })

  return (
    <>
      {circles.map((circle, index) => {
        return (
          <circle
            key={index}
            fill={primary}
            cx={circle.center.x}
            cy={circle.center.y}
            r={circle.radius}
          />
        )
      })}
    </>
  )
}
```

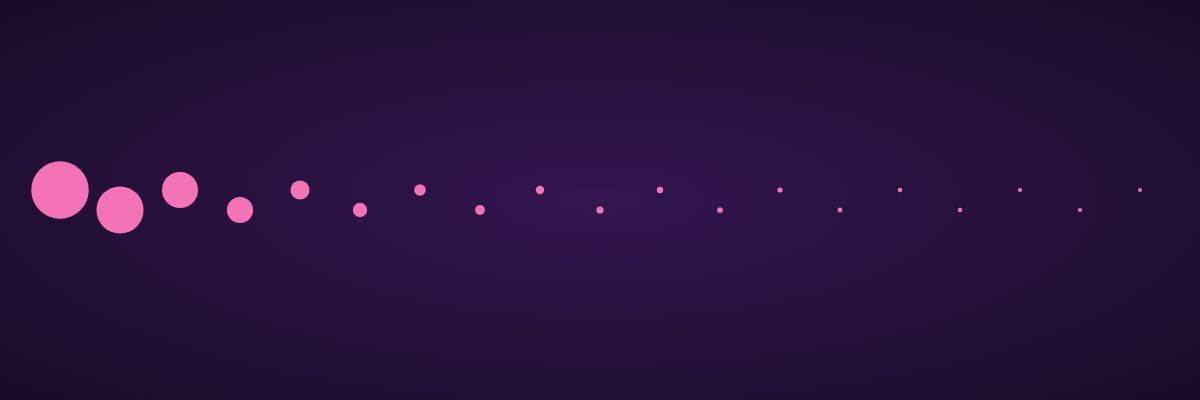

This is what the rendered result looks like:

There are a few issues with this video. The first is that only the far-left circle moves noticeably, while the rest stay mostly static. The values returned by visualizeAudio are ordered from low to high frequencies, and the selected audio clip mainly contains low-frequency sound, so most of its energy lands in the first value. To capture this range better, we can request many more samples from the audio data and drive the circles with only the lowest ones. This effectively "zooms in" on the low end of the spectrum.
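
To see why more samples help, we can estimate which frequencies the first 19 values cover. The sketch below assumes the values are evenly spaced frequency bins from 0 Hz up to half the sample rate, and assumes a 48 kHz sample rate. Both are simplifying assumptions for illustration; the Remotion docs only state that the left side of the array holds the low frequencies.

```typescript
// Rough estimate of the highest frequency covered by the first `usedBins`
// values, ASSUMING `numberOfSamples` bins evenly spaced from 0 Hz to the
// Nyquist frequency (half the sample rate).
function coveredFrequencyHz(usedBins: number, numberOfSamples: number, sampleRate: number): number {
  const nyquist = sampleRate / 2
  const binWidth = nyquist / numberOfSamples
  return usedBins * binWidth
}

// visualizeAudio requires numberOfSamples to be a power of two
function isPowerOfTwo(value: number): boolean {
  return Number.isInteger(value) && value > 0 && (value & (value - 1)) === 0
}

for (const numberOfSamples of [32, 1024]) {
  console.log(
    numberOfSamples,
    isPowerOfTwo(numberOfSamples),
    coveredFrequencyHz(19, numberOfSamples, 48_000),
  )
}
// 32 true 14250
// 1024 true 445.3125
```

Under these assumptions, with 32 samples the 19 circles span roughly 0 to 14 kHz, so a bass-heavy track mostly drives the first circle; with 1024 samples they span roughly 0 to 450 Hz, which is where the bass actually sits.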

```tsx
export default () => {
  // ... same code as before

  const visualization = visualizeAudio({
    fps,
    frame,
    audioData,
    // Updated from 32 to 1024 (must be a power of two)
    numberOfSamples: 1024,
    smoothing: true,
  })

  // ...
}
```

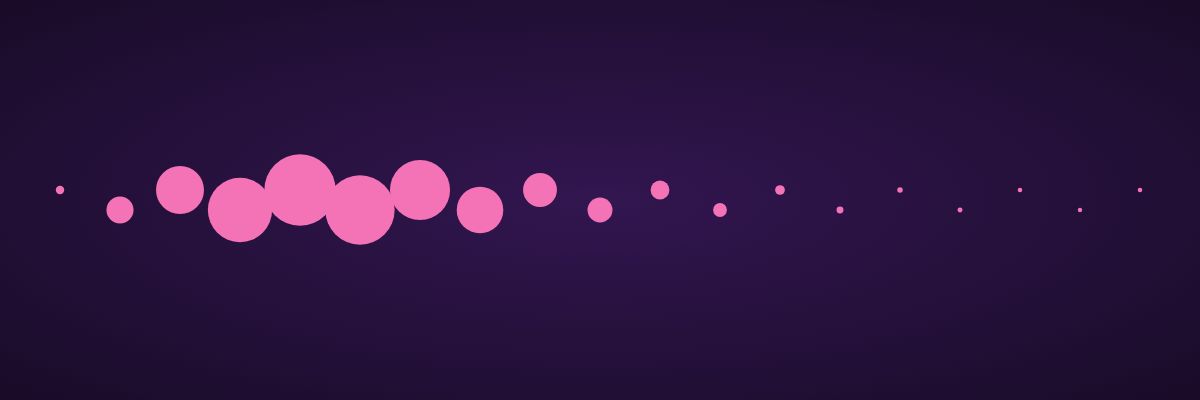

The movement is now distributed much more evenly across the circles:

Introducing physics

This is where the fun begins. Currently, the circles overlap without interacting with each other, which we can fix by implementing collision detection and resolution.

Before we tackle collisions between all the circles, let's start with detecting and resolving the collision between just two circles. We'll add a static calculateCollision function to the Circle class to handle this.

The idea behind this calculation is that when two circles collide, they push each other apart until they are no longer overlapping. Their interaction is influenced by their sizes: a larger circle, having greater mass, will be pushed less when colliding with a smaller circle.

This is a simplified collision model. It doesn't account for factors like drag, friction, or momentum, but for our purposes those details aren't important. (After all, how realistic is it for circles to change size?)

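```typescript
// Member of the Circle class
static calculateCollision(a: Circle, b: Circle): [Circle, Circle] {
  const delta = b.center.subtract(a.center)
  const distance = delta.length()
  const combinedRadii = a.radius + b.radius

  // `>=` (rather than `>`) also skips two zero-radius circles,
  // whose weights below would otherwise be 0 / 0.
  if (distance >= combinedRadii) {
    // Not colliding
    return [a, b]
  }

  // How deep the circles overlap along the line between their centers.
  // Note: if both centers coincide, delta is a zero vector; how
  // normalize() handles that depends on the Vector2 implementation.
  const overlap = combinedRadii - distance
  const separationDirection = delta.normalize()

  // Each circle moves in proportion to the *other* circle's radius,
  // so the larger circle is pushed less. The weights sum to 1,
  // which removes the overlap for this pair completely.
  const weightA = b.radius / combinedRadii
  const weightB = a.radius / combinedRadii

  const moveA = separationDirection.multiply(overlap * weightA)
  const moveB = separationDirection.multiply(overlap * weightB)

  const newPositionA = a.center.subtract(moveA)
  const newPositionB = b.center.add(moveB)

  return [a.setCenter(newPositionA), b.setCenter(newPositionB)]
}
```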
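
Here is the same push-apart rule as a standalone function on plain objects, to check the arithmetic with concrete numbers. It is a sketch, not the repository's code, and the `distance === 0` guard is an addition of this sketch. A circle of radius 30 and one of radius 10 whose centers are 32px apart overlap by 40 - 32 = 8px. The large circle gets the weight 10 / 40 = 0.25 and moves 2px; the small one gets 30 / 40 = 0.75 and moves 6px, so they end up exactly touching.

```typescript
interface PlainCircle {
  x: number
  y: number
  r: number
}

// Push both circles apart along the line between their centers,
// splitting the overlap in proportion to the other circle's radius.
function resolvePair(a: PlainCircle, b: PlainCircle): [PlainCircle, PlainCircle] {
  const dx = b.x - a.x
  const dy = b.y - a.y
  const distance = Math.hypot(dx, dy)
  const combinedRadii = a.r + b.r

  // Not overlapping, or coincident centers with no direction to push in
  if (distance >= combinedRadii || distance === 0) {
    return [a, b]
  }

  const overlap = combinedRadii - distance
  const ux = dx / distance // unit direction from a to b
  const uy = dy / distance
  const moveA = overlap * (b.r / combinedRadii)
  const moveB = overlap * (a.r / combinedRadii)

  return [
    { ...a, x: a.x - ux * moveA, y: a.y - uy * moveA },
    { ...b, x: b.x + ux * moveB, y: b.y + uy * moveB },
  ]
}

const [big, small] = resolvePair({ x: 0, y: 0, r: 30 }, { x: 32, y: 0, r: 10 })
console.log(big.x, small.x) // -2 38
```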

Next, we'll implement collision handling for multiple circles. The approach is to calculate collisions between each pair of circles and adjust their positions accordingly. However, when two circles are repositioned due to a collision, one or both might end up overlapping with another circle. This means a single round of collision calculations is not sufficient, as the final placement could still result in overlaps.

To address this, we add an iterationCount parameter to the function. A single iteration might leave some circles overlapping, but additional iterations gradually resolve these conflicts. It's worth tuning iterationCount to find a limit that keeps the simulation efficient while still looking visually acceptable.

Additionally, we include an optional boundary check using the clampInRectangle function to ensure that circles remain within the screen's limits.

```typescript
// Member of the Circle class
static calculateCollisionBetweenAll({
  circles: input,
  iterationCount = 1,
  rectangleSize,
}: {
  circles: Circle[]
  iterationCount?: number
  rectangleSize?: Vector2
}): Circle[] {
  // Copy the input so the caller's array is not mutated
  const circles = [...input]

  for (let iter = 0; iter < iterationCount; iter++) {
    // Resolve every unique pair (i, j) with i < j once per iteration
    for (let i = 0; i < circles.length; i++) {
      for (let j = i + 1; j < circles.length; j++) {
        const [newCircleA, newCircleB] = Circle.calculateCollision(
          circles[i],
          circles[j],
        )
        circles[i] = newCircleA
        circles[j] = newCircleB
      }
    }

    // Optionally keep every circle inside the screen bounds
    if (rectangleSize) {
      for (let i = 0; i < circles.length; i++) {
        circles[i] = circles[i].clampInRectangle(rectangleSize)
      }
    }
  }

  return circles
}
```
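
To see why a single pass isn't enough, here is a standalone sketch of the same pairwise loop on plain objects (without the boundary check), using the push-apart rule of calculateCollision. Three circles of radius 10 start 10px apart on a line. Resolving the first pair pushes the middle circle deeper into the third one, and resolving that pair pushes it back into the first, so some overlap survives each pass. In this particular setup, every extra iteration cuts the worst overlap to a quarter.

```typescript
interface PlainCircle {
  x: number
  y: number
  r: number
}

// Same rule as Circle.calculateCollision, written on plain objects.
function resolvePair(a: PlainCircle, b: PlainCircle): [PlainCircle, PlainCircle] {
  const dx = b.x - a.x
  const dy = b.y - a.y
  const distance = Math.hypot(dx, dy)
  const combinedRadii = a.r + b.r
  if (distance >= combinedRadii || distance === 0) return [a, b]

  const overlap = combinedRadii - distance
  const moveA = overlap * (b.r / combinedRadii)
  const moveB = overlap * (a.r / combinedRadii)
  const ux = dx / distance
  const uy = dy / distance
  return [
    { ...a, x: a.x - ux * moveA, y: a.y - uy * moveA },
    { ...b, x: b.x + ux * moveB, y: b.y + uy * moveB },
  ]
}

// Relax every unique pair `iterationCount` times, like calculateCollisionBetweenAll.
function relax(input: PlainCircle[], iterationCount: number): PlainCircle[] {
  const circles = [...input]
  for (let iter = 0; iter < iterationCount; iter++) {
    for (let i = 0; i < circles.length; i++) {
      for (let j = i + 1; j < circles.length; j++) {
        const [a, b] = resolvePair(circles[i], circles[j])
        circles[i] = a
        circles[j] = b
      }
    }
  }
  return circles
}

// Largest overlap between any two circles (0 when none overlap)
function maxOverlap(circles: PlainCircle[]): number {
  let worst = 0
  for (let i = 0; i < circles.length; i++) {
    for (let j = i + 1; j < circles.length; j++) {
      const distance = Math.hypot(circles[j].x - circles[i].x, circles[j].y - circles[i].y)
      worst = Math.max(worst, circles[i].r + circles[j].r - distance)
    }
  }
  return worst
}

const row: PlainCircle[] = [0, 10, 20].map((x) => ({ x, y: 0, r: 10 }))
for (const iterations of [1, 2, 5]) {
  console.log(iterations, maxOverlap(relax(row, iterations)))
}
// 1 7.5
// 2 1.875
// 5 0.029296875
```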

The good news is that after implementing all this code, only a few minor adjustments are needed to make the physics work. We'll modify the radius calculation to make the visualizer's impact more pronounced, and then incorporate the collision handling to position the circles correctly.

```typescript
let circles = createArrayOfSize(circleCount).map((index) => {
  // ... same code as before

  // A larger multiplier makes the audio's impact more pronounced
  const radius = visualization[index] * 600

  return new Circle(center, radius)
})

// Add the collision:
circles = Circle.calculateCollisionBetweenAll({
  // The circles are created the same way as in the previous example.
  circles,
  // How many iterations the collision resolution runs.
  // Fewer iterations may leave circles overlapping;
  // more iterations are more resource-intensive.
  iterationCount: 5,
  // Keep the circles inside the video frame
  rectangleSize: new Vector2(width, height),
})
```

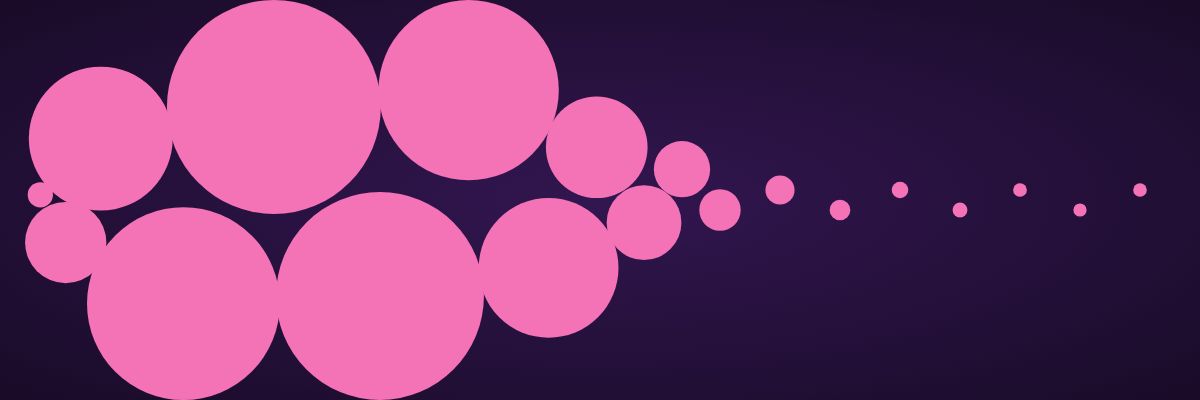

Finally, this is what the scene with colliding circles looks like:

Precalculated physics

Introducing the collision was a major step, but a few more adjustments are still needed. Notice that the circles change size too abruptly between frames, even though the smoothing parameter was set to true in the visualizeAudio call. This smoothing only blends 3 frames, which isn't enough to achieve a pleasing visual effect.

To address this, we need to blend more frames together. But how can we do this?

One option might be to sample previous audio values and blend them, but this approach won't produce a smooth animation. The circles collide unpredictably, and calculating their positions would require backtracking through the entire physics simulation from the beginning.

Instead, we need to maintain state so that the circles can transition smoothly in size and position over multiple frames. Recomputing the whole simulation from the first frame every time a frame is rendered is impractical, because each frame would repeat all the work already done for the frames before it. By frame 240 (4 seconds at 60 fps), rendering a single frame would mean replaying the simulation for every preceding frame, which becomes extremely resource-intensive.
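
A quick count shows the difference. If every rendered frame replayed the simulation from frame 0, frame n would need n steps on top of the initial state, so rendering all 240 frames would cost 0 + 1 + ... + 239 = 28,680 steps, versus 239 steps when each frame is derived once from the previous one. The sketch below illustrates both approaches independently of Remotion; `Step`, `stateAtFrame` and `precomputeAllFrames` are hypothetical names, and the step function stands in for "blend toward the targets, then resolve collisions".

```typescript
// One simulation step: derive a frame's state from the previous frame's state.
type Step<State> = (previous: State, frame: number) => State

// Naive approach: replay the simulation from frame 0 to reach `frame`.
function stateAtFrame<State>(initial: State, step: Step<State>, frame: number): State {
  let state = initial
  for (let f = 1; f <= frame; f++) {
    state = step(state, f)
  }
  return state
}

// Precomputed approach: simulate once and store every frame's state,
// so rendering a frame becomes a simple array lookup.
function precomputeAllFrames<State>(initial: State, step: Step<State>, durationInFrames: number): State[] {
  const states: State[] = [initial]
  for (let frame = 1; frame < durationInFrames; frame++) {
    states.push(step(states[frame - 1], frame))
  }
  return states
}

// Count how often the step runs for a 4-second video at 60 fps.
const durationInFrames = 240
let naiveSteps = 0
let precomputedSteps = 0

for (let frame = 0; frame < durationInFrames; frame++) {
  stateAtFrame<number>(
    0,
    (state) => {
      naiveSteps++
      return state + 1
    },
    frame,
  )
}

precomputeAllFrames<number>(
  0,
  (state) => {
    precomputedSteps++
    return state + 1
  },
  durationInFrames,
)

console.log(naiveSteps, precomputedSteps) // 28680 239
```

Both approaches produce the same state for any given frame; the precomputed one just never repeats work.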

Although we mentioned in the animation intro that Remotion is focused on rendering at a given frame index, this applies only to the rendering logic. Fortunately, we can precompute the circles' positions in the calculateMetadata function and then use those precomputed values during frame rendering.

Let's introduce a type alias first:

```typescript
/**
 * An array that holds an array of circles for each frame.
 * Should be indexed by the current frame to get an array of circles.
 */
export type CirclesInFrame = Circle[][]
```

Then create the calculateMetadata function:

```typescript
import type { CalculateMetadataFunction } from "remotion"

export const calculatePopcornSoundVisualizerMetadata: CalculateMetadataFunction<
  PopcornSoundVisualizerCompositionProps
> = async ({ props }) => {
  const fps = 60
  const width = 1200
  const height = 400
  const durationInFrames = fps * 4 // 4 seconds
  const screenSize = new Vector2(width, height)

  // Run the whole simulation once, before any frame is rendered
  const circlesInFrame = await getCircles({
    scene: props.scene,
    durationInFrames,
    fps,
    screenSize,
  })

  return {
    fps,
    width,
    height,
    durationInFrames,
    props: {
      ...props,
      calculated: circlesInFrame ? { circlesInFrame } : undefined,
    } satisfies PopcornSoundVisualizerCompositionProps,
  }
}
```

The getCircles function called in calculatePopcornSoundVisualizerMetadata calculates the circles for every frame: it sets up the initial circle positions and passes them to a function that determines their actual positions and sizes. We'll omit the initialization code, since it's identical to the previous examples, and focus on the functions that build the per-frame circles array.

The first function is a direct rewrite of the existing logic, without any additional blending between frames. It shows what the relocated code looks like and allows a comparison with the previous version.

Essentially, the body of the for loop mirrors the current rendering logic:

```typescript
import { getAudioData, visualizeAudio } from "@remotion/media-utils"
// Vector2, Circle, CirclesInFrame and sampleMusicUrl come from the project repository.

export async function precalculateCirclesFirstVersion({
  startPositions,
  durationInFrames,
  fps,
  screenSize,
  iterationCount,
  multiplier,
}: {
  startPositions: Vector2[]
  durationInFrames: number
  fps: number
  screenSize?: Vector2
  iterationCount: number
  multiplier: number
}): Promise<CirclesInFrame> {
  // Outside of a component there are no hooks, so load the audio directly
  const audioData = await getAudioData(sampleMusicUrl)

  const result: CirclesInFrame = []

  // Create an element for each frame.
  for (let frame = 0; frame < durationInFrames; frame++) {
    const visualization = visualizeAudio({
      fps,
      frame,
      numberOfSamples: 1024,
      audioData,
      smoothing: true,
    })

    function getRadius(index: number) {
      return visualization[index] * multiplier
    }

    // Same logic as the component before: circles at their start
    // positions, sized by the audio, then separated by collisions
    let circles = startPositions.map(
      (center, index) => new Circle(center, getRadius(index)),
    )

    circles = Circle.calculateCollisionBetweenAll({
      circles,
      iterationCount,
      rectangleSize: screenSize,
    })

    result.push(circles)
  }

  return result
}
```

Finally, here is the component code. It's much simpler now, since rendering is reduced to a straightforward lookup by frame index:

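```tsx
import { useCurrentFrame } from "remotion"
// useColorPalette and the CirclesInFrame type come from the project repository.

export default ({ circles: circlesInFrame }: { circles: CirclesInFrame }) => {
  const { primary } = useColorPalette()
  const frame = useCurrentFrame()

  // Everything was computed up front; just pick this frame's circles
  const circles = circlesInFrame[frame]

  return (
    <>
      {circles.map((circle, index) => {
        return (
          <circle
            key={index}
            fill={primary}
            cx={circle.center.x}
            cy={circle.center.y}
            r={circle.radius}
          />
        )
      })}
    </>
  )
}
```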

As expected, the output is almost the same as in the previous scene:

Blending with the previous value

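From this point, we only need to make a few adjustments to how the circles are precalculated. First, instead of starting from scratch on every frame, we take the previous frame's center and radius and gradually move them toward their target values. The target is what the value would be without any mixing, smoothing, or blending applied: for the radius, the value derived from the current audio sample; for the center, the circle's original start position.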

(In this context, "blend," "mix," and "smooth" are used interchangeably to describe the process of gradually transitioning a value towards a target. Whether it's blending, mixing, or smoothing, the idea is to avoid abrupt changes by adjusting the value in smaller steps over time.)
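
The blending code below relies on a `mix` helper (and a `mix` method on `Vector2`) from the repository, whose source isn't shown. The sketch assumes it is ordinary linear interpolation, `a + (b - a) * t`, which matches the behavior described in the code comments (a blend of 1 returns the target). Applied once per frame, it closes a fixed fraction `t` of the remaining gap, so the gap shrinks by a factor of `1 - t` every frame. `blendOverFrames` is a hypothetical helper for illustration.

```typescript
// ASSUMED definition of the repository's `mix` helper: linear interpolation.
// t = 0 keeps `current`, t = 1 jumps straight to `target`.
function mix(current: number, target: number, t: number): number {
  return current + (target - current) * t
}

// Apply the blend once per frame while the target stays fixed,
// recording the value after each frame.
function blendOverFrames(start: number, target: number, t: number, frames: number): number[] {
  const values: number[] = []
  let value = start
  for (let frame = 0; frame < frames; frame++) {
    value = mix(value, target, t)
    values.push(value)
  }
  return values
}

// A radius easing from 0 toward 100 with radiusBlend = 0.2: each frame
// covers 20% of the remaining gap (roughly 20, 36, 48.8, 59.04, 67.23).
console.log(blendOverFrames(0, 100, 0.2, 5))
```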

Additionally, we'll handle the radius and center separately during the blending process, allowing independent control through the positionBlend and radiusBlend parameters.

```typescript
import { getAudioData, visualizeAudio } from "@remotion/media-utils"
// mix, Vector2, Circle, CirclesInFrame and sampleMusicUrl come from the project repository.

export async function precalculateCirclesBlended({
  // Same parameters as previously
  startPositions,
  durationInFrames,
  fps,
  screenSize,
  iterationCount,
  multiplier,
  // Specify a "blend" value for both movement and radius.
  // The value must be in the range [0, 1].
  // A value of 1 means only the latest value is considered,
  // ignoring the previous one.
  // If the value is less than 1, the circle gradually transitions
  // toward its target position and radius instead of updating instantly.
  positionBlend = 0.6,
  radiusBlend = 0.2,
}: {
  startPositions: Vector2[]
  durationInFrames: number
  fps: number
  screenSize?: Vector2
  iterationCount: number
  multiplier: number
  positionBlend?: number
  radiusBlend?: number
}): Promise<CirclesInFrame> {
  const audioData = await getAudioData(sampleMusicUrl)
  const result: CirclesInFrame = []

  for (let frame = 0; frame < durationInFrames; frame++) {
    const visualization = visualizeAudio({
      fps,
      frame,
      numberOfSamples: 1024,
      audioData,
    })

    function getRadius(index: number) {
      return visualization[index] * multiplier
    }

    if (frame === 0) {
      // Initialize the radius with zero so it does not get overblown
      const circles = startPositions.map((center) => new Circle(center, 0))

      result.push(
        Circle.calculateCollisionBetweenAll({
          circles,
          iterationCount,
          rectangleSize: screenSize,
        }),
      )
      continue
    }

    // The rest of the frames rely on the previous calculation: each circle
    // drifts back toward its start position and eases toward the radius
    // suggested by the current audio sample.
    const circles = result[frame - 1].map((circle, index) => {
      const newPosition = circle.center.mix(
        startPositions[index],
        positionBlend,
      )
      const newRadius = mix(circle.radius, getRadius(index), radiusBlend)

      return new Circle(newPosition, newRadius)
    })

    result.push(
      Circle.calculateCollisionBetweenAll({
        circles,
        iterationCount,
        rectangleSize: screenSize,
      }),
    )
  }

  return result
}
```
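
To get a feel for the default values, we can estimate how long each blend takes to settle. Assuming `mix` is linear interpolation, a blend factor `t` leaves `(1 - t)^n` of the gap after `n` frames, so closing 90% of the gap takes the smallest `n` with `(1 - t)^n <= 0.1`. The hypothetical `framesToSettle` helper below computes that. It's a back-of-the-envelope estimate that ignores collisions, which keep pushing circles away from their start positions.

```typescript
// Smallest number of frames n such that (1 - t)^n <= remainingFraction,
// assuming mix(a, b, t) = a + (b - a) * t is applied once per frame.
function framesToSettle(t: number, remainingFraction: number): number {
  if (t >= 1) return 1 // jumps to the target immediately
  if (t <= 0) return Infinity // never moves
  return Math.ceil(Math.log(remainingFraction) / Math.log(1 - t))
}

const fps = 60
const blends = [
  { name: "positionBlend", value: 0.6 },
  { name: "radiusBlend", value: 0.2 },
]

for (const { name, value } of blends) {
  const frames = framesToSettle(value, 0.1)
  const milliseconds = Math.round((frames / fps) * 1000)
  console.log(`${name}=${value}: ${frames} frames (~${milliseconds} ms) to close 90% of the gap`)
}
// positionBlend=0.6: 3 frames (~50 ms) to close 90% of the gap
// radiusBlend=0.2: 11 frames (~183 ms) to close 90% of the gap
```

Under these assumptions, a displaced circle returns most of the way to its start position within about 3 frames, while a radius change takes about 11 frames (roughly 0.18 s at 60 fps).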

Here is the rendered output:

We're almost done; just one final improvement is needed. The issue with blending is that sudden increases in volume, like a sharp beat, are not visible because they get smoothed out. To address this, we can make the smoothing of the circle's radius one-directional: it can increase instantly but will take time to shrink. This way, the visual effect is more closely aligned with the sound.

Here's the updated per-frame mapping in precalculateCirclesBlended, which runs during calculateMetadata:

```typescript
const circles = result[frame - 1].map((circle, index) => {
  const newPosition = circle.center.mix(startPositions[index], positionBlend)

  const targetRadius = getRadius(index)
  const currentRadius = circle.radius

  const newRadius =
    // Grows immediately, shrinks gradually.
    targetRadius > currentRadius
      ? targetRadius
      : mix(currentRadius, targetRadius, radiusBlend)

  return new Circle(newPosition, newRadius)
})
```
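
The one-directional rule behaves like an envelope follower with an instant attack and a gradual release. The standalone sketch below compares it with the symmetric blend on a made-up sequence of target radii containing a single beat. It assumes `mix` is linear interpolation, and it uses a blend of 0.5 instead of 0.2 only to keep the numbers round; `smoothSymmetric` and `smoothPeak` are hypothetical names.

```typescript
// ASSUMED: mix is plain linear interpolation.
function mix(current: number, target: number, t: number): number {
  return current + (target - current) * t
}

// Symmetric smoothing: grows and shrinks at the same rate.
function smoothSymmetric(targets: number[], blend: number): number[] {
  const out: number[] = []
  let radius = 0 // radii start at zero, as in the blended version
  for (const target of targets) {
    radius = mix(radius, target, blend)
    out.push(radius)
  }
  return out
}

// One-directional smoothing: jumps up immediately, decays gradually.
function smoothPeak(targets: number[], blend: number): number[] {
  const out: number[] = []
  let radius = 0
  for (const target of targets) {
    radius = target > radius ? target : mix(radius, target, blend)
    out.push(radius)
  }
  return out
}

// A single beat on the second frame, silence otherwise
const beat = [0, 100, 0, 0, 0]
console.log(smoothSymmetric(beat, 0.5)) // [ 0, 50, 25, 12.5, 6.25 ]
console.log(smoothPeak(beat, 0.5)) // [ 0, 100, 50, 25, 12.5 ]
```

The symmetric version never reaches the beat's full size, while the one-directional version shows the full peak on the beat's frame and then fades out.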

With this change, we arrive at the final output, the one featured at the start of the article:

Summary

In this article, we built a "popcorn" sound visualizer in Remotion, starting with static circle placement and gradually introducing collision physics. We then precomputed the simulation in calculateMetadata so that state could carry over between frames, blended positions and radii over time, and made the radius smoothing one-directional so that sudden beats stay visible. With these refinements, the final visualizer follows the audio more dynamically and accurately.

For the complete project setup and more resources, visit the GitHub repository.